Imagine being able to manipulate your favourite celebrity into saying whatever you want. This is now reality. Deepfakes are the latest threat to privacy and a source of potentially dangerous misinformation in video form.

What is a “deepfake”?

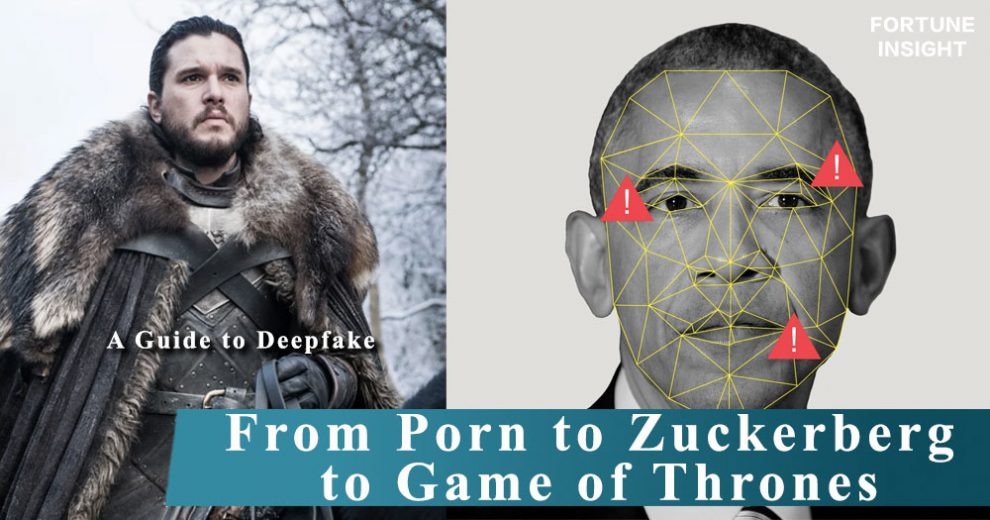

A “deepfake” is an AI-manipulated video that makes people appear to say things they never said, while closely mimicking the appearance and, in some cases, the voice of the real subject being superimposed onto the footage. The technology is relatively new: deepfakes first appeared on Reddit in 2017. The word is a portmanteau of “deep learning” and “fake”. Using deep learning, an AI model can be trained on hours of footage of a person to produce realistic fake videos with seamless mouth and head movements and matching skin coloration.

Why is it dangerous?

Deepfakes can be high quality, yet they are quick and easy for almost anyone to create. They are so realistic that viewers often struggle to realise that the people shown in them are, in fact, not real. So far, deepfakes have been used to make pornography and videos of influential figures, some attracting millions of views. Victims of deepfake pornography include major Hollywood stars such as Scarlett Johansson and Gal Gadot, whose faces have been swapped onto those of the performers in the videos. Deepfakes have also been used to create “revenge porn” featuring ex-partners’ faces. Such videos are deeply harmful, especially to the people depicted in them.

The technology also has an uncanny ability to disrupt politics, as 2019 has shown. Influential figures such as Barack Obama, US House Speaker Nancy Pelosi and Mark Zuckerberg are among the recent victims of deepfakes.

In the Zuckerberg deepfake, a convincing imitation of the Facebook CEO, complete with his mannerisms, says: “Imagine this for a second: one man, with total control of billions of people’s stolen data…”.

The video was posted on Instagram, which is owned by Facebook, and an Internet uproar ensued.

Government Response

Researchers are currently scrambling to build detection software, but deepfakes are so easy to produce that new videos appear faster than detectors can keep up. Deepfakes raise three main issues: consent, the potential for blackmail, and the use of the technology against people in power.

To counter these issues, the House Intelligence Committee has said that tech companies should “put in place policies to protect users from misinformation before the 2020 elections.”

Text by Fortune Insight

Source: Multiple